True Automation And Data Collection In Your Life.

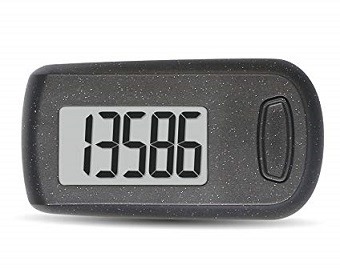

Wearables and fitness trackers were virtually non-existent five years ago. The nerds amongst us were using a physical pedometer to track our steps.

Fast forward five years and wearables are now a 25 billion dollar market. They are everywhere!

Even though most of the metrics that wearables track hardly mean anything, wearable health trackers have at least proven that people love the idea of monitoring their health. I personally believe that automation and analytics involve more than just wearing a band and tracking your steps or even your heart rate. I have talked about my fascination for automation here.

For me, effective automation must satisfy a couple of simple criteria before it can become a part of my life:

True Automation Is Transparent.

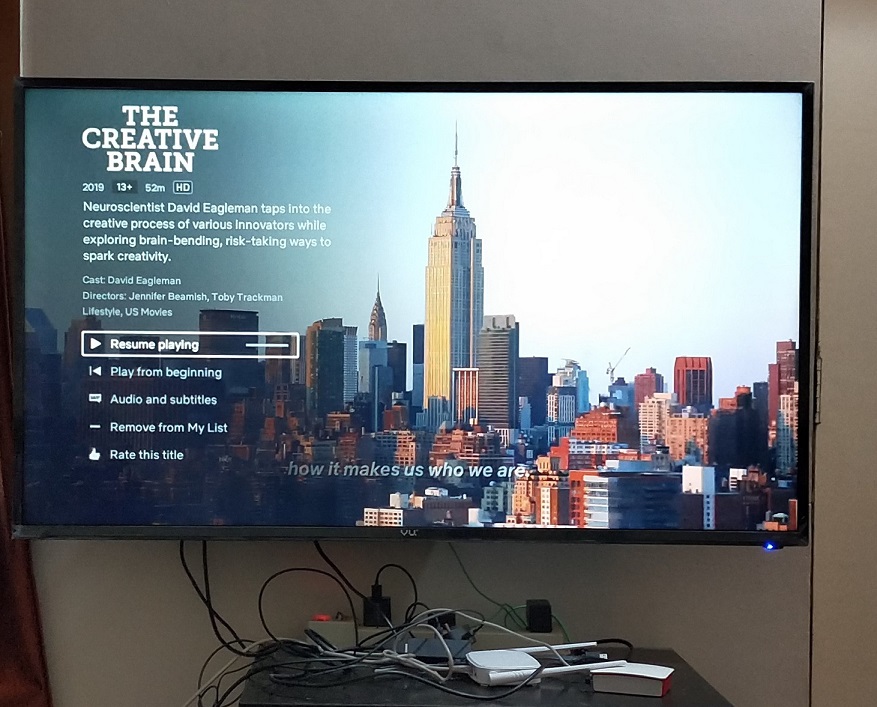

It’s one of the biggest reasons why I don’t like wearables. If you have to check your watch five times every day to see how many steps you walked and stare at your heart rate every hour to infer your health from that, I don’t think you’re automating and tracking anything! All you are doing is losing touch with yourself and cultivating obsessions and anxiety.

That’s what the companies that make fitness trackers want you to do. Just like social media companies want you to constantly engage on their platforms, your fitness tracker wants you to keep looking at it dozens of times a day to get validation about your health and wellbeing. No wonder people have panic attacks when these devices fail.

You shouldn’t be constantly peeking at a watch or an app to get confirmation on how healthy you are. Your health is something you should be mindful of and your body should talk to you. Your health is something you should feel. You should be able to mindfully listen to your body.

Also, step count is a bad measure of fitness, the data you collect is hardly ever analyzed over the long term, and collecting that data with a band is way too obtrusive.

True automation and data collection work silently, without you even noticing. You set it and you forget it.

Really, when the big tech giants of the world are collecting your data from your browsing history, they aren’t constantly pinging and buzzing you. That is what makes their data collection so effective. It’s so silent, you don't even know it's happening.

When you work on collecting data about yourself, you need to have similar processes in place for automation and data collection. Also, not everyone needs to collect the same data either, which transitions us to our next point.

True Automation Is Personalized.

As a nerd who has run a half marathon and multiple 10Ks, I understand that step count is a bad metric and means nothing. For me, the number of hours I spend working out is a much better metric than the number of steps I walked.

Collecting the number of steps actually messes me up! I see 16,000 steps on a pedometer on most evenings, and then I silently convince myself that I have done way more walking today than a regular person, so I don't need to work out.

It's a lousy metric that is literally detrimental to my cardiovascular health and overall fitness. Every time I wear a band, the band convinces me that I don't need to work out and my workout sessions come down.

For me, simply counting the number of days I worked out in a month is a way better metric than my daily step count for an entire year. The point? What matters to me may not matter to you. True automation is personalized.

For example, my commute is a big deal for me. I like to hack my time and minimize the time I spend commuting to work and back. It’s such a big deal for me that I need to track and analyze that data. If you live close to your workplace and spend ten minutes walking to the office, tracking your commute might mean nothing to you.
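To make the "days I worked out in a month" metric concrete, here is a minimal sketch in Python. It assumes you already have a list of workout dates from somewhere; the function name `workout_days_per_month` and the sample log are made up for illustration and are not the tooling I actually use.

```python
from collections import Counter
from datetime import date


def workout_days_per_month(workout_dates):
    """Count distinct workout days, grouped by (year, month)."""
    # Two sessions on the same day still count as one workout day.
    unique_days = set(workout_dates)
    return Counter((d.year, d.month) for d in unique_days)


# Hypothetical log: two sessions on Jan 3, one on Jan 10, one on Feb 1.
log = [
    date(2024, 1, 3),
    date(2024, 1, 3),
    date(2024, 1, 10),
    date(2024, 2, 1),
]

counts = workout_days_per_month(log)
for (year, month), days in sorted(counts.items()):
    print(f"{year}-{month:02d}: {days} workout day(s)")
```

A single number per month is easy to glance at once in a while, which is the whole idea: no band, no buzzing, no daily checking.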

Spam calls are a serious problem for me and I feel the need to automate blocking those because I literally get multiple spam calls a day. You may not be getting any and may not want to automate blocking those.

Similarly, since I moved away from the city my family lives in, the amount of time I spend talking to my parents and family back home is a big deal for me, so I track that.

What matters is different for everyone. Automation should be personalized, and if you truly want to automate parts of your life, it's about time you put a bit of programming effort into your own customized automation, took your data into your own hands, and picked up a few automation tools that work for you.

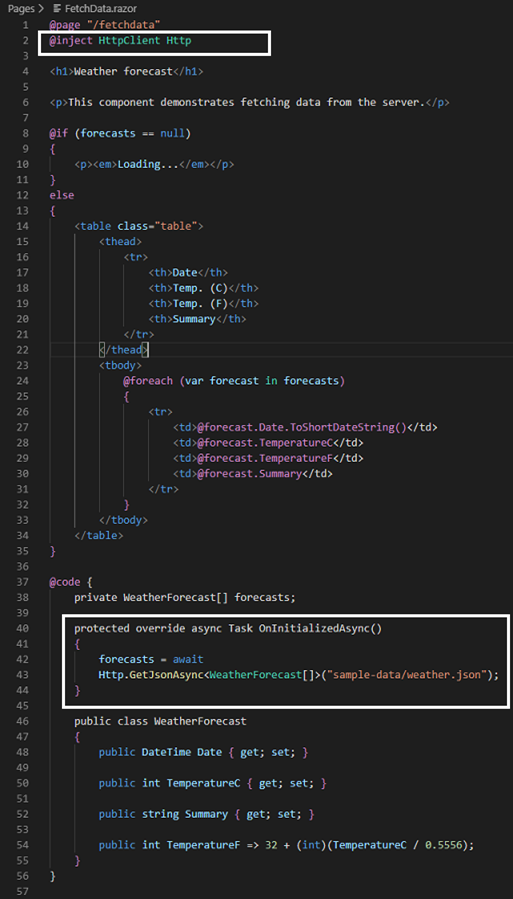

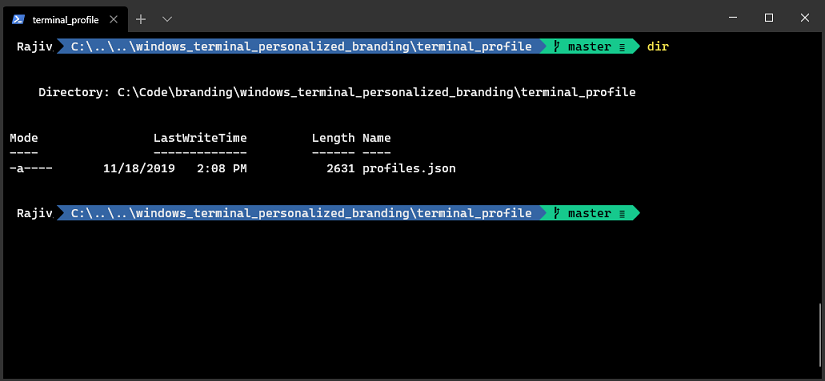

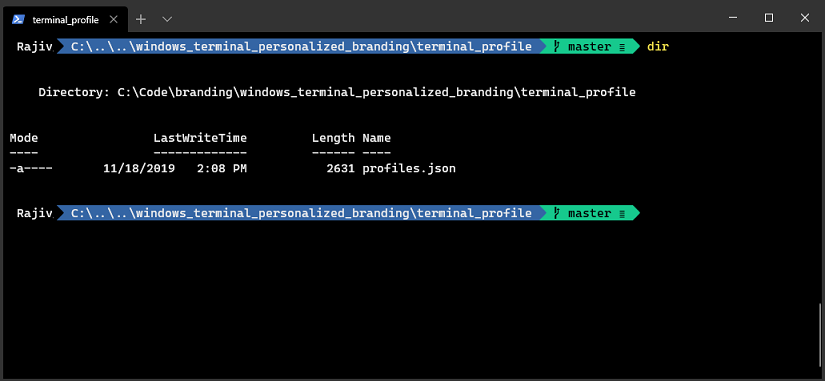

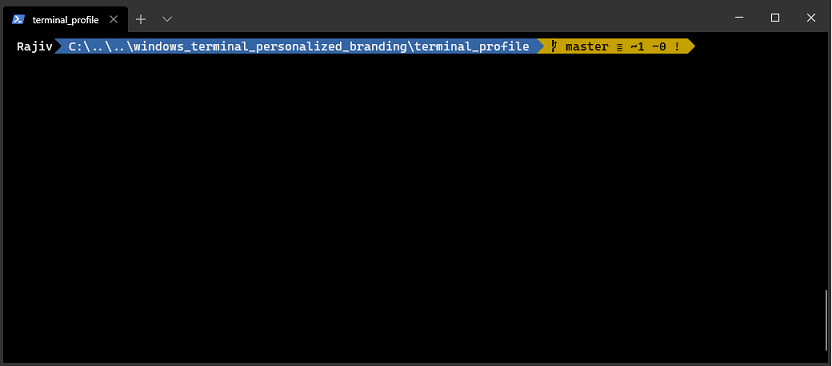

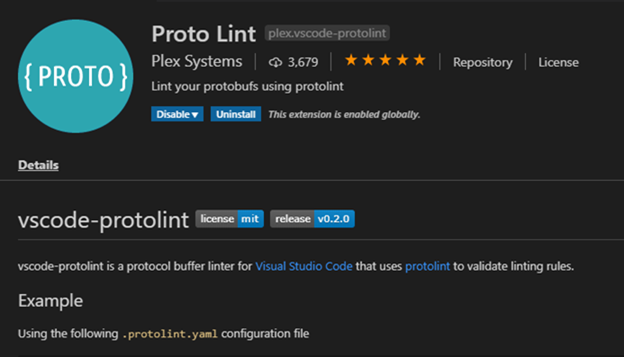

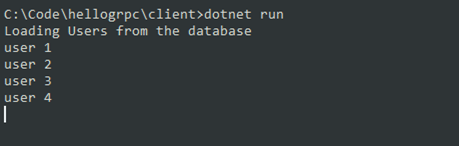

In this series of posts, I plan on showcasing how I personalize my automation and share some of the tools I use with you. Every tool I use eventually collects data about my activities and the time I spend. I’ll also show you how that data then pools into a centralized database that I own myself, which brings us to our next topic.

Good Automation Doesn’t Work In Isolation

What I eat has an impact on my mood. How much time I spend on the road actually has an impact on how efficient I am at work. How much sound sleep my wife gets has an impact on how many fights we have. :) Tracking an isolated item like the number of steps or heart rate literally means nothing.

When you start bringing a bunch of these random facts into a central database, you suddenly start getting insights you never had before.

If you truly want to automate and analyze your life with data, you need to design and own a database of data points from your life that matter to you.

When you own your own data sets and design your own automation, it becomes that much easier to connect things and write smarter code and analytics to make sense of your data.
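As a rough sketch of what "a central database you own" could look like, the Python snippet below puts every data point into one SQLite table and then lines up two metrics by day. The table name `data_points`, the metric names, and the sample values are all assumptions made up for this example; this is not my actual schema.

```python
import sqlite3


def build_db(rows):
    """Create an in-memory database holding (day, metric, value) rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE data_points ("
        " day TEXT NOT NULL,"
        " metric TEXT NOT NULL,"
        " value REAL NOT NULL,"
        " PRIMARY KEY (day, metric))"
    )
    conn.executemany("INSERT INTO data_points VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def side_by_side(conn, metric_a, metric_b):
    """Return (day, value_a, value_b) for days that have both metrics."""
    query = """
        SELECT a.day, a.value, b.value
        FROM data_points AS a
        JOIN data_points AS b ON a.day = b.day
        WHERE a.metric = ? AND b.metric = ?
        ORDER BY a.day
    """
    return conn.execute(query, (metric_a, metric_b)).fetchall()


# Hypothetical sample data: commute time next to focused work time.
rows = [
    ("2024-01-01", "commute_minutes", 95.0),
    ("2024-01-01", "focused_work_hours", 4.5),
    ("2024-01-02", "commute_minutes", 40.0),
    ("2024-01-02", "focused_work_hours", 6.0),
    ("2024-01-03", "commute_minutes", 60.0),  # no work data that day
]

conn = build_db(rows)
for day, commute, focus in side_by_side(conn, "commute_minutes", "focused_work_hours"):
    print(day, commute, focus)
```

Keeping everything in one long, skinny table means a new tracker only has to write rows with a new metric name, and any two metrics can be compared with the same query.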

And The Point Of This Series Of Posts Is?

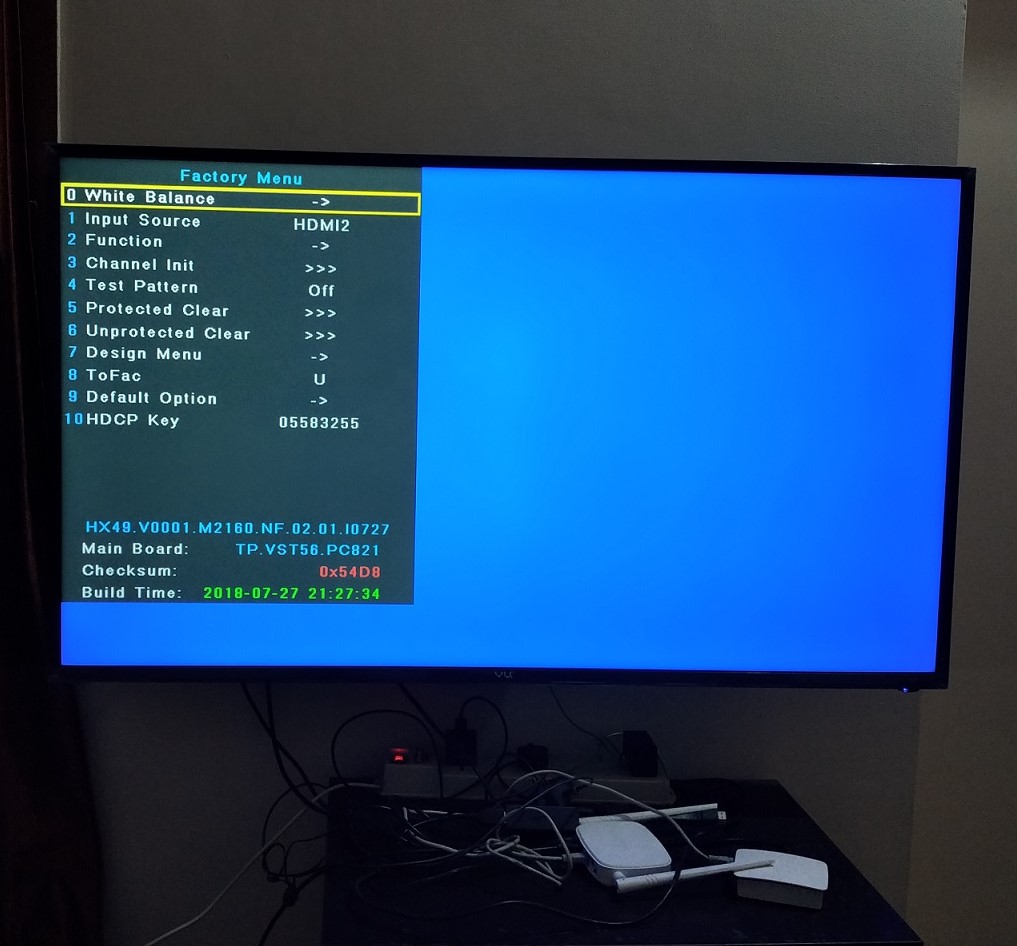

The idea I’m trying to share with you is that you need your own personalized automation and a database of data that really matters to you. I’ll be doing a series of posts here where I talk about things I automate and track in my own life.

In this series of posts, I plan on taking you through some simple automation tools and techniques to make you more effective and help you collect and analyze data about yourself and your loved ones.

We will go through a bunch of the data collection techniques I use and some of the fun automation I’ve set up around my life.

As nerds, most of us are excited about automation, machine learning, and data science, but most folks learning these skills don't have a real project to apply them to. Why not put them to use to automate and improve your own life?

Through this series of posts, I want to learn from you more than I want to teach you. Please use my techniques and tools if you like them and go build your own automation and intelligence around what matters most to you. Please use the comments generously or drop me an email to let me know the automation you are doing.

Think of this series of posts as nothing more than a nerd mucking around and having fun with some data and some code. And in the process, I hope to learn and share something meaningful and something useful with you.

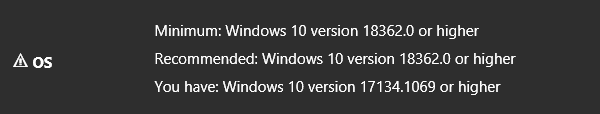

In the next post, we’ll start with reducing your psychic weight and using basic automation on your phone to get things that bother you out of your life. So watch out for this series of posts (or subscribe to this blog) for more on the topic of basic automation, machine learning, and analytics to improve your life!
