I love my test cases. But real test cases are so much more than just input-output testing. Most people I see writing unit tests get this wrong: they write little more than basic end-to-end input-output tests.

If all you want is a set of simple input-output tests that check your Web API returns the same responses for the same requests across the versions you deploy, so that you can run a quick regression, you shouldn't be writing test cases at all.

Diffy, from the Twitter team, does a better job than hand-written test cases if this is all you're trying to achieve. Diffy takes a slightly different approach to input-output testing. Suppose you have a fully tested, stable version of a microservice or Web API in production and you plan to roll out another version of the same service, which we'll call the release candidate. Diffy can automate a lot of the basic regression testing without you having to write any test cases.

When you want to launch a release candidate, Diffy lets you send the same call to both production and the release candidate and compares the responses, so you know whether something is broken and what differs between the release candidate and the production version.

Here is a simple example of how you can use Diffy to test Web APIs without writing test cases explicitly:

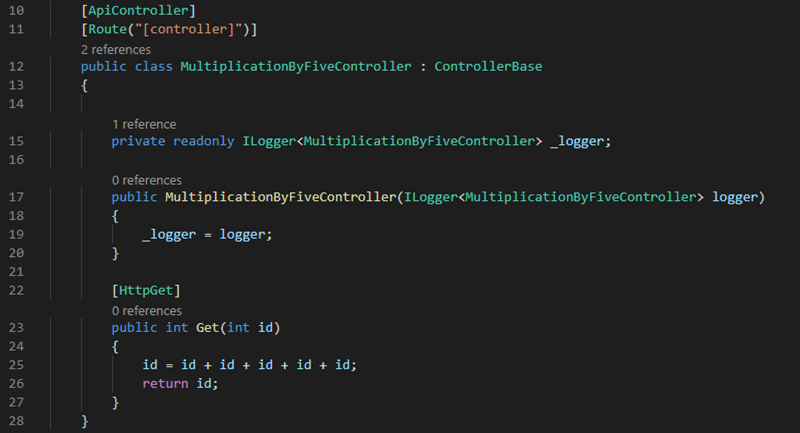

To keep things simple, let's start with a Web API with one simple method, which we are going to assume is hosted in production. Let's say all the service does is multiply a number by five. We've written the code in a strange, convoluted way (by adding the same number five times) so that we can demonstrate refactoring and then testing again using Diffy. So to begin with, our controller looks like this:

Let's call this service 1 and let's assume this is the version of the service now running in production:

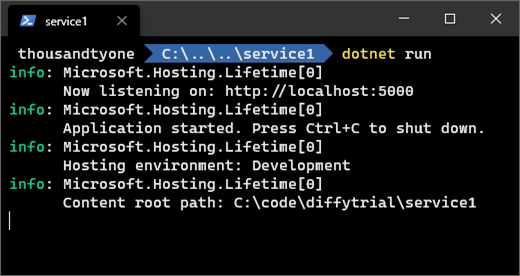

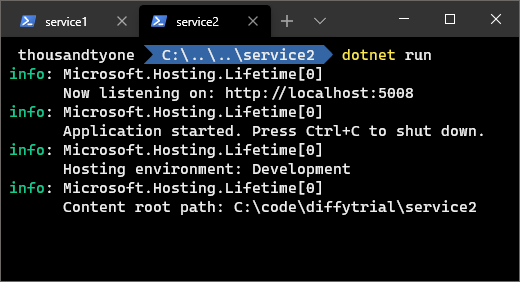
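The controller itself appears only in the screenshots. As a rough, language-neutral stand-in, here is a minimal Python sketch of the same deliberately roundabout logic. The function name `multiply_by_five` is an assumption for illustration, not the name used in the original service.

```python
def multiply_by_five(number: int) -> int:
    """Multiply by five the long way: add the number to a running total five times."""
    total = 0
    for _ in range(5):
        total += number
    return total


if __name__ == "__main__":
    print(multiply_by_five(8))  # prints 40
```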

Now let's go ahead and deploy an identical release candidate of the service, this time on a different port. Let's call it service 2:

OK, so now we have two identical services running. One is in production and one is a release candidate. Now let's get Diffy up and running to see if their request and response models are identical. That will allow us to get started with some basic testing.

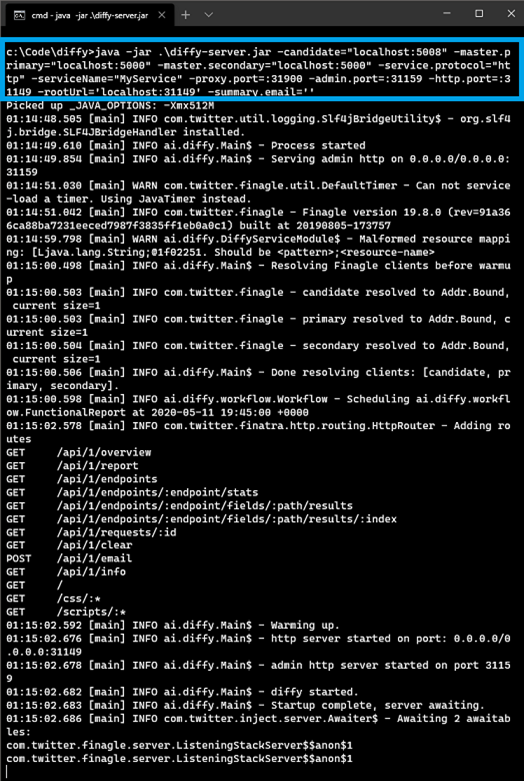

To get Diffy you can get the latest diffy-server.jar from here. Once the Diffy jar is on my machine, I just need Java installed, and then I can run this command to point Diffy at both of my services so that it can start comparing them:

Command: java -jar .\diffy-server.jar -candidate="localhost:5008" -master.primary="localhost:5000" -master.secondary="localhost:5000" -service.protocol="http" -serviceName="MyService" -proxy.port=:31900 -admin.port=:31159 -http.port=:31149 -rootUrl='localhost:31149' -summary.email=''

Here, the candidate is the release candidate: the newer version we are going to deploy and want to test.

The primary master is the service that is in production. Ideally, you would deploy two copies of the API in the production environment, because Diffy analyses how requests and responses vary between two instances of the production API and uses that to filter out noise. Say, for example, a particular attribute of the production service returned a random value each time. Diffy would see different values for that attribute coming back from the two production instances, understand that the attribute is 'noise', and ignore differences in its value. This second instance of the production service is called the secondary master. To keep things really simple, let's keep both of those the same, which is why the primary and secondary URLs in the above command point to the same endpoint.
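The noise-filtering idea can be illustrated with a small Python sketch. This is a simplified picture of the concept, not Diffy's actual algorithm, and the field names (`result`, `requestId`) are made up for the example: any field that differs between the two production instances is treated as noise and is not held against the candidate.

```python
from typing import Any, Dict, Set

_MISSING = object()  # marks a field that is absent from one of the responses


def differing_fields(a: Dict[str, Any], b: Dict[str, Any]) -> Set[str]:
    """Return the top-level field names whose values differ between two responses."""
    return {
        key
        for key in a.keys() | b.keys()
        if a.get(key, _MISSING) != b.get(key, _MISSING)
    }


def real_differences(
    primary: Dict[str, Any],
    secondary: Dict[str, Any],
    candidate: Dict[str, Any],
) -> Set[str]:
    """Fields that differ from production after noisy fields are ignored."""
    # Production disagreeing with itself means the field is noisy.
    noise = differing_fields(primary, secondary)
    # Production disagreeing with the candidate is a raw difference.
    raw = differing_fields(primary, candidate)
    return raw - noise


if __name__ == "__main__":
    primary = {"result": 40, "requestId": "a1"}    # hypothetical responses
    secondary = {"result": 40, "requestId": "b2"}
    candidate = {"result": 64, "requestId": "c3"}
    print(real_differences(primary, secondary, candidate))  # prints {'result'}
```

Here `requestId` changes on every call, so it is filtered out, while the changed `result` is still reported.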

Now, if all goes well, Diffy will have started a proxy to which you can send production traffic. Diffy forwards that traffic to both production and the release candidate and checks whether their responses are identical. Since the above command asked Diffy to start a proxy on port 31900, let's send some traffic to that port using Postman:

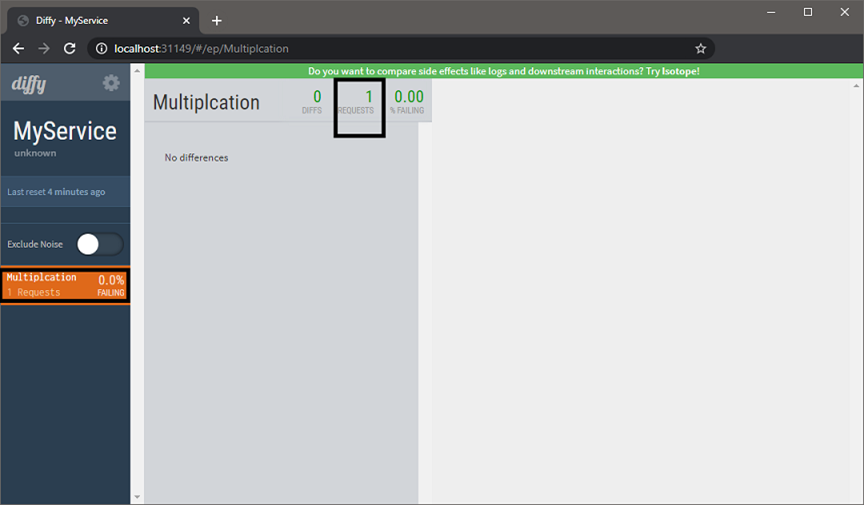

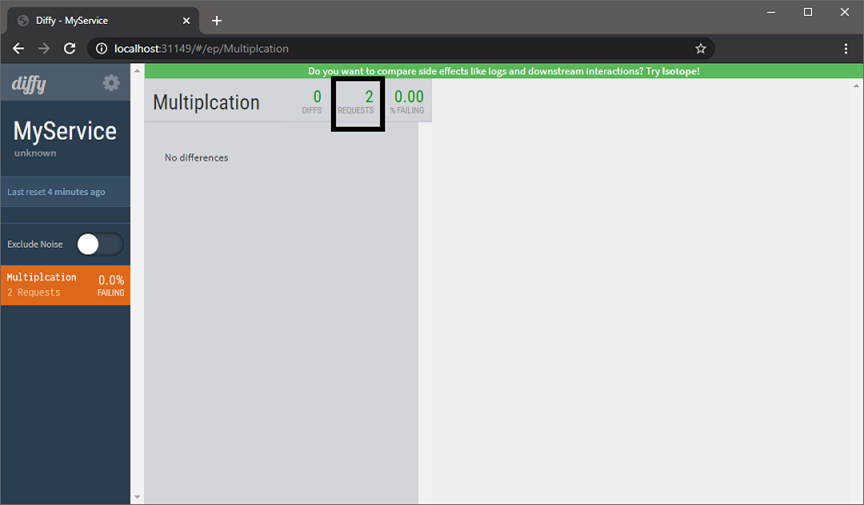

So I make a call to the Diffy proxy exactly as if I were calling the service. I pass a value of 8 for the service to multiply by 5. I also add a Canonical-Resource header, because that helps Diffy identify and group calls under that name (see the word "Multiplication" in the screenshot below? That comes from the Canonical-Resource header), but it's not mandatory. If you leave it out, Diffy just groups your calls under a group called "Unidentified Endpoint". Once I make that call, I can open the Diffy console to see if everything went all right.

The above console shows that Diffy received a request and routed it to both services, and that the responses showed no differences (0 diffs). Hence my release candidate is safe to deploy and will continue to work with all production calls.
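If you would rather script the call than use Postman, a minimal Python sketch might look like this. The port comes from the `-proxy.port` value in the command above. The route `/api/multiply/8` and the use of GET are assumptions, since the real route is only visible in the screenshots, so substitute your own. The grouping header is sent as `Canonical-Resource`.

```python
import urllib.request

PROXY_URL = "http://localhost:31900"  # proxy port from the Diffy command
RESOURCE_PATH = "/api/multiply/8"     # hypothetical route, replace with your own


def build_proxy_request(path: str, group: str) -> urllib.request.Request:
    """Build a GET request aimed at the Diffy proxy, tagged with a group name."""
    return urllib.request.Request(
        PROXY_URL + path,
        headers={"Canonical-Resource": group},
        method="GET",
    )


request = build_proxy_request(RESOURCE_PATH, "Multiplication")
print(request.get_method(), request.full_url)

# To actually send it (Diffy and both services must be running):
#     with urllib.request.urlopen(request) as response:
#         print(response.status, response.read().decode())
```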

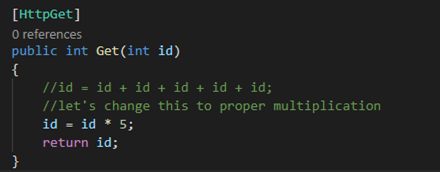

Now let's go to the release candidate and change the internal logic from adding the same number five times to multiplying by five directly (remember we added the same number five times initially?):

Note that I've only refactored the code and changed the service internally; the outer signatures and the request and response of the release candidate are still the same. Now I want to quickly test the release candidate for regression to see if it's safe to send to production. So I make the same Postman call I made earlier, and then I check the Diffy dashboard:

Notice how Diffy now shows 2 calls; the second is the call we made after refactoring. However, since the code changes were internal to the service, the request and response are still identical, so Diffy tells me none of my calls are failing. The refactoring I did is safe to deploy to production.

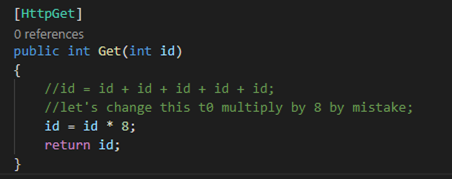
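Why the refactoring produces no diffs can be sketched in a few lines of Python. The two functions below are illustrative stand-ins for the production service and the refactored release candidate, with made-up names. They differ internally but return the same output for every input, which is all a response comparison can see.

```python
from typing import Iterable


def multiply_by_addition(number: int) -> int:
    """Production behaviour: add the number five times."""
    return sum(number for _ in range(5))


def multiply_directly(number: int) -> int:
    """Refactored release candidate: multiply by five in one step."""
    return number * 5


def count_diffs(inputs: Iterable[int]) -> int:
    """Count the inputs for which the two versions return different results."""
    return sum(
        1 for n in inputs if multiply_by_addition(n) != multiply_directly(n)
    )


if __name__ == "__main__":
    print(count_diffs(range(-100, 101)))  # prints 0
```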

Now let's go ahead and make a mistake on purpose. Let's say that, by mistake, our release candidate ends up multiplying by 8 instead of by 5.

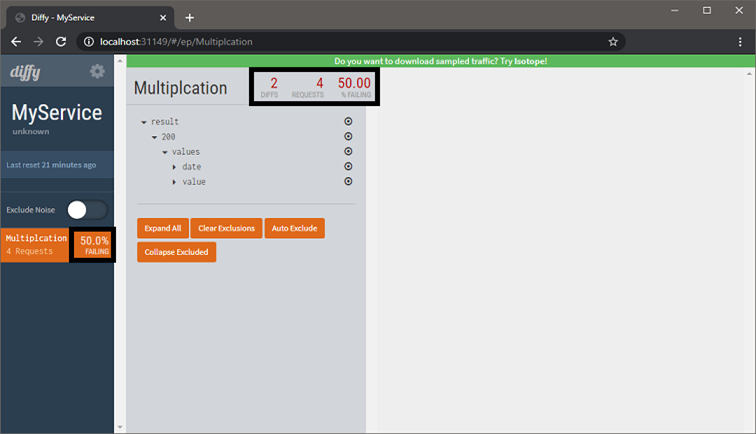

Now that we've made a mistake that affects the output, one that clients consuming the release candidate will notice and be affected by, let's see if Diffy can catch it. I make the same Postman call to the proxy two more times to see how Diffy captures that:

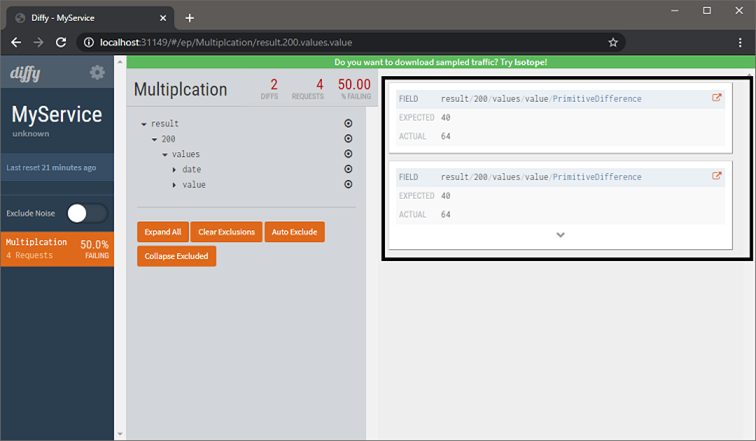

Because I made two more calls to the proxy, Diffy captured those calls, passed them to both production and the release candidate, noticed the two weren't behaving identically, and started showing me those two calls as diffs. 2 of my 4 calls are considered failures, so I have a failure rate of 50%.

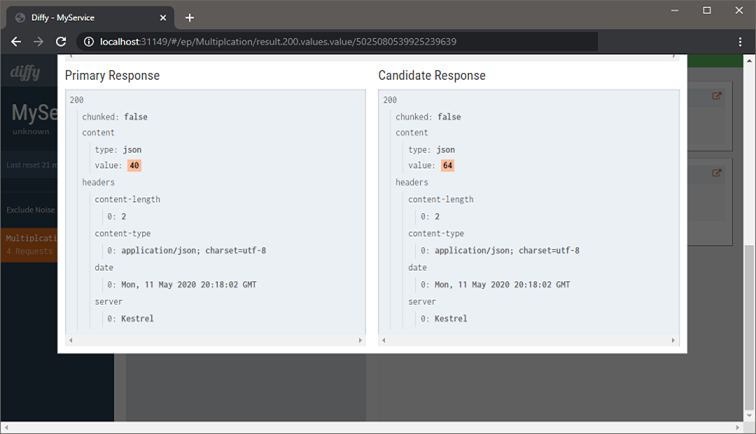
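The arithmetic behind that 50% can be reproduced with a short Python sketch. The functions are illustrative stand-ins for the services (hypothetical names), and the four calls mirror the sequence in this walkthrough: one against an identical candidate, one against the refactored candidate, and two against the buggy one.

```python
from typing import List, Tuple


def production(number: int) -> int:
    return number * 5


def refactored_candidate(number: int) -> int:
    return number * 5


def buggy_candidate(number: int) -> int:
    return number * 8  # the deliberate mistake


def failure_rate(calls: List[Tuple[int, int]]) -> float:
    """Fraction of (production, candidate) response pairs that differ."""
    failures = sum(1 for prod, cand in calls if prod != cand)
    return failures / len(calls)


calls = [
    (production(8), production(8)),            # call 1: identical candidate
    (production(8), refactored_candidate(8)),  # call 2: after refactoring
    (production(8), buggy_candidate(8)),       # call 3: after the mistake
    (production(8), buggy_candidate(8)),       # call 4: after the mistake
]
print(f"{failure_rate(calls):.0%}")  # prints 50%
```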

And just like unit test results, Diffy tells me what's wrong in the two calls that failed:

I can drill down further and compare my request and response objects too. If I had huge response objects, it would help to see exactly where my production and release candidate responses vary:
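To give a feel for that kind of drill-down, here is a minimal Python sketch that walks two JSON-like responses and reports the path of every value that differs. It only illustrates the idea and is not how Diffy is implemented. The response shape is hypothetical.

```python
from typing import Any, List, Tuple

Difference = Tuple[str, Any, Any]  # (path, production value, candidate value)


def find_differences(expected: Any, actual: Any, path: str = "") -> List[Difference]:
    """Recursively compare two JSON-like values and list every mismatch."""
    if isinstance(expected, dict) and isinstance(actual, dict):
        diffs: List[Difference] = []
        for key in sorted(expected.keys() | actual.keys()):
            child = f"{path}.{key}" if path else str(key)
            diffs.extend(find_differences(expected.get(key), actual.get(key), child))
        return diffs
    if (
        isinstance(expected, list)
        and isinstance(actual, list)
        and len(expected) == len(actual)
    ):
        diffs = []
        for index, (e, a) in enumerate(zip(expected, actual)):
            diffs.extend(find_differences(e, a, f"{path}[{index}]"))
        return diffs
    # Leaf values, or containers of different type or length: compare directly.
    return [] if expected == actual else [(path, expected, actual)]


if __name__ == "__main__":
    production_response = {"input": 8, "result": 40, "meta": {"version": "1.0"}}
    candidate_response = {"input": 8, "result": 64, "meta": {"version": "1.0"}}
    print(find_differences(production_response, candidate_response))
    # prints [('result', 40, 64)]
```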

Like I said, this does not replace writing good unit tests or test-driven development. But if all you are interested in is basic input-output testing and some peace of mind about regressions, you don't need to write test cases just for that. Why not use Diffy instead?

Of course, this is just the tip of the iceberg. Things get even more interesting when you automatically take production traffic and route it to Diffy instead of testing it explicitly. A lot of the testing we did here with Diffy can also be automated, but that's for another day. For now, this should tell you what Diffy is all about and get you playing with it!

Go ahead and give Diffy a try. See if it makes your automated input-output testing or your basic integration testing that much simpler, so you don't have to write and maintain test cases for basic microservice regression.
