gRPC has been around for quite some time, but it has recently been integrated into .NET Core 3.0, and the tooling support for it is now first class.

If you write REST Web APIs or microservices using .NET Core, you send JSON data over HTTP requests. The service does its work and sends a JSON response back.

Until your request object has fully reached the service, the service waits and doesn’t begin processing. Then it does its work and sends you a response. Until your browser or client has received the whole response, there is not much the client can do, so it basically waits. That’s the request-response model we’ve all grown up with.

We’ve had various takes on improving this basic design in the past. gRPC is Google’s take on making RPC calls and leveraging data streams instead of the standard request-response model.

Without going into too much theory, gRPC uses Google’s Protocol Buffers to generate code, which then sends data in a compact binary format over specialized streams (HTTP/2 under the hood). This happens to be really fast and, as the name suggests, allows streaming of both request and response objects.

Streams are better because you can use the data as it comes in. A crude example? When you stream a video, you don’t download the whole file first; you watch it as it downloads. gRPC uses the same approach for data. If this doesn’t make sense, read on, and by the time you’ve mucked around a bit with the code it will all start making sense.
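To make the idea concrete before any gRPC is involved, here is a minimal sketch in plain C# 8 (async streams, available with .NET Core 3.0). It is not gRPC code; the class name, the method names and the sample user names are all made up for illustration. It contrasts consuming items as they arrive with waiting for the complete list.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class StreamingDemo
{
    // Hypothetical data source: yields each name as soon as it is ready.
    public static async IAsyncEnumerable<string> StreamUsers()
    {
        foreach (var name in new[] { "alice", "bob", "carol" })
        {
            await Task.Delay(100); // simulate per-item work
            yield return name;     // the consumer can use this item right away
        }
    }

    // Request-response style: nothing is returned until every item is ready.
    public static async Task<List<string>> LoadAllUsers()
    {
        var all = new List<string>();
        await foreach (var name in StreamUsers())
        {
            all.Add(name);
        }
        return all;
    }

    public static async Task Main()
    {
        // Streaming style: each item is handled as it arrives.
        await foreach (var name in StreamUsers())
        {
            Console.WriteLine($"received {name}");
        }

        // Request-response style: one wait, then everything at once.
        var users = await LoadAllUsers();
        Console.WriteLine($"received all {users.Count} users at once");
    }
}
```

The streaming loop starts printing after the first delay, while the second call stays silent until all three items are done. gRPC gives you the first behaviour across the network.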

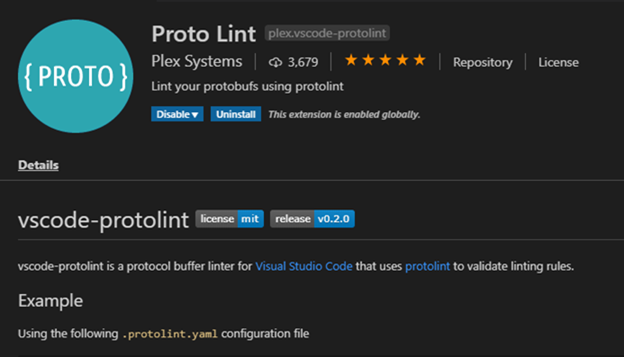

For this example we’ll use Visual Studio Code. The tooling is much simpler with Visual Studio 2019, but I prefer Visual Studio Code as my IDE of choice because it shows me what’s going on under the hood. With Visual Studio Code, I use the following plugin to get proto file syntax highlighting and support directly inside my IDE:

For syntax highlighting you can also use additional plugins like this one:

I have .NET Core 3 installed on my machine.

The first thing I do is:

- Generate a server project: This is like your Web API that is going to be consumed by the client.

- Generate the client project: This is your client that is going to consume the server and get the data by invoking an endpoint/method on the server.

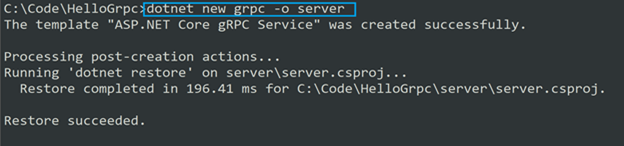

I generate the server-side project using:

The -o option specifies the output path and creates a folder called 'server' where the gRPC service is generated.

I reference the following NuGet packages from the VS Code terminal:

dotnet add package Grpc.Net.Client

dotnet add package Google.Protobuf

dotnet add package Grpc.Tools

Here are the repositories of these three NuGet packages if you want to know more about them:

Once I've stubbed the code out and added the necessary packages to the project, I build the server using:

dotnet build

And then I open the code with VS Code.

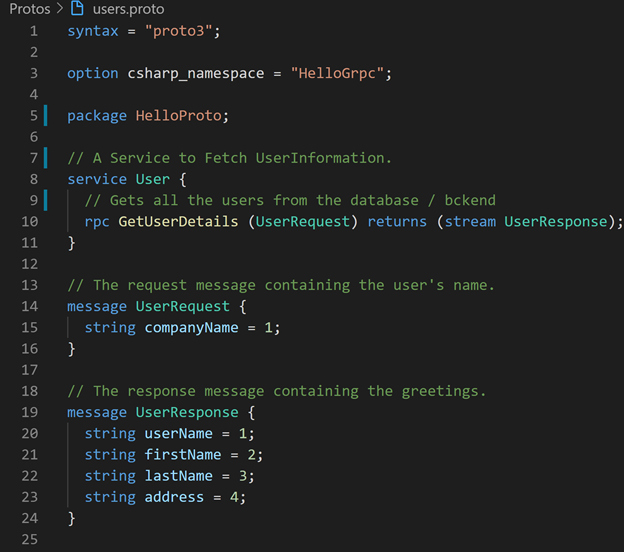

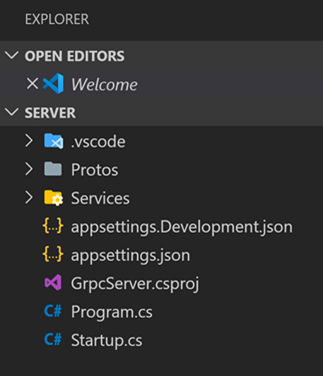

Notice the Protos folder? That has the proto files the .NET tooling generated for us. Think of proto files as the equivalent of WSDL files if you come from the web service world. Proto files are the specification for your service, and you write your own by hand. You primarily use them to describe your request objects, response objects and your methods. Here is the example of the proto file that I wrote:
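The listing below is a sketch of a proto file consistent with the description in this post, not necessarily the exact file. The message names, attribute names and the rpc line come from the post. The service name `UserService`, the package, the C# namespace and the `string` field types are assumptions.

```protobuf
syntax = "proto3";

// Assumption: namespace used for the generated C# classes.
option csharp_namespace = "Server";

// Assumption: package name.
package users;

// Assumption: the service name is hypothetical.
service UserService {
  // Takes a company name and streams back its users one at a time.
  rpc GetUserDetails (UserRequest) returns (stream UserResponse);
}

message UserRequest {
  string companyName = 1;
}

message UserResponse {
  string userName = 1;
  string firstName = 2;
  string lastName = 3;
  string address = 4;
}
```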

The above proto file basically says:

- I have a request object with the “companyName” attribute, which is assigned field number 1. This is the request object because I will be passing the company name whose users I want to fetch.

- I have a response object with these attributes: userName, firstName, lastName and address. The numbers next to them are field numbers: unique tags that identify each attribute in the serialized message, so they should not be changed or reused once the service is in use.

- I have a method that takes a company name and streams back the list of users to the client. This is indicated by the “rpc GetUserDetails (UserRequest) returns (stream UserResponse);” line of code that you see in the above screenshot.

GetUserDetails is the method that accepts a UserRequest and returns a stream of UserResponse. (A stream here is a sequence of UserResponse objects sent to the client one at a time, rather than a single array returned all at once.)

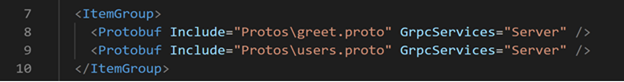

Every time I add a .proto file, I add it to the server's project (.csproj) file:
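As a rough sketch, the entry is a `Protobuf` item handled by Grpc.Tools. The file name `users.proto` is an assumption; `GrpcServices="Server"` asks the tooling to generate the server-side base class along with the message classes.

```xml
<ItemGroup>
  <!-- Assumption: the proto file is named users.proto and lives in the Protos folder. -->
  <Protobuf Include="Protos\users.proto" GrpcServices="Server" />
</ItemGroup>
```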

Once I’ve done that, I fire the build and the Grpc.Tools NuGet package generates the C# files for me in the background, including the real request and response classes. With Visual Studio 2019 this tooling is hidden under the hood. With VS Code the tooling fires when you build your project using the “dotnet build” command.

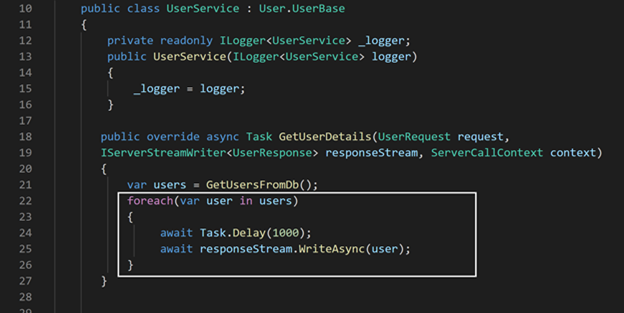

Once I have the stubs I can write the service. In the service, I fetch some hard-coded values from a function. Typically, I would fetch these from a database or another service, but for now let’s keep this simple and focus on gRPC.

Once I fetch the data, I push it back to the client. But instead of building one response object that the client has to wait for and "download" in full, I use gRPC to stream the data back to the client one user at a time:
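A minimal sketch of such a service method follows. It assumes the proto file declares a service named `UserService` (a hypothetical name), so that the tooling generates `UserService.UserServiceBase`, `UserRequest` and `UserResponse`. It therefore only compiles inside a project that contains those generated classes and references Grpc.AspNetCore. The class name `UserDetailsService`, the one-second delay and the sample users are made up.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Grpc.Core;

// UserService.UserServiceBase, UserRequest and UserResponse are assumed to be
// generated from the .proto file; add a using for their namespace if it differs.
public class UserDetailsService : UserService.UserServiceBase
{
    public override async Task GetUserDetails(
        UserRequest request,
        IServerStreamWriter<UserResponse> responseStream,
        ServerCallContext context)
    {
        foreach (var user in GetUserFromDb(request.CompanyName))
        {
            // Simulate the time it takes to process each user.
            await Task.Delay(1000);

            // Push this user to the client now instead of collecting a full list.
            await responseStream.WriteAsync(user);
        }
    }

    // Hard-coded stand-in for a database or downstream service call.
    private static IEnumerable<UserResponse> GetUserFromDb(string companyName)
    {
        yield return new UserResponse
        {
            UserName = "jdoe", FirstName = "John", LastName = "Doe", Address = "1 Main St"
        };
        yield return new UserResponse
        {
            UserName = "asmith", FirstName = "Anna", LastName = "Smith", Address = "2 High St"
        };
    }
}
```

In an ASP.NET Core 3.0 project, a service like this is typically exposed by calling `endpoints.MapGrpcService<UserDetailsService>()` inside `UseEndpoints` in Startup.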

Typically, I would have just returned the users I get from GetUserFromDb to the client, but that would produce a regular response. I want to stream the users back, so I write them asynchronously to the response stream. Also notice the Task.Delay? I do that to simulate the delays that might occur on the server as it processes and returns each user. This shows that each processed user is streamed back to the client even as the server continues its processing of the remaining users.

Each user that I write to the stream now flows back to the client and the client can start doing whatever it wants to do with it rather than waiting for the whole response to complete.

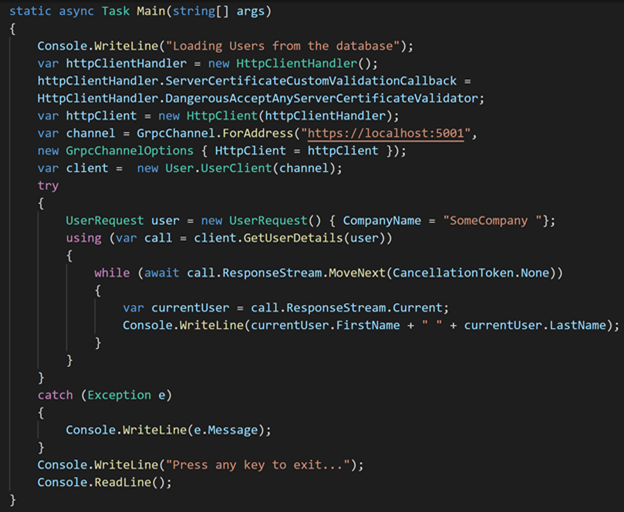

On the client side, I write a simple .NET console application that makes a call to the server. The only thing the client needs in order to generate the code to call the server is a copy of the proto files, which contain the specs for the entire service. You would send your proto files to your clients or publish them somewhere.

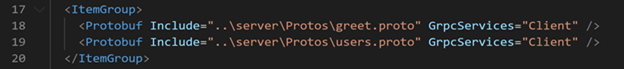

I copy the same proto files to the client side and include them in my client project as “Client” files. Here is how I modify the project (.csproj) file:
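A sketch of what that entry can look like, again assuming the file is named `users.proto`. The only difference from the server project is `GrpcServices="Client"`, which makes the tooling generate the client stub instead of the server base class.

```xml
<ItemGroup>
  <!-- Assumption: same hypothetical users.proto, copied into the client project. -->
  <Protobuf Include="Protos\users.proto" GrpcServices="Client" />
</ItemGroup>
```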

With the .proto files included in the client project, I can fire a build. This generates all the stubs I need on the client side to call the server.

Once this is done I start writing the client.
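A minimal sketch of such a client follows, under the same assumptions as before: the generated client class is called `UserService.UserServiceClient` (hypothetical service name), the server listens on `https://localhost:5001`, and "Contoso" is a made-up company name. It needs the generated classes and the Grpc.Net.Client package to compile.

```csharp
using System;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;
using Grpc.Net.Client;

public static class Program
{
    public static async Task Main()
    {
        // Development only: accept the untrusted local certificate.
        var handler = new HttpClientHandler
        {
            ServerCertificateCustomValidationCallback =
                HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
        };

        // Assumption: the server address; adjust to wherever the server runs.
        using var channel = GrpcChannel.ForAddress(
            "https://localhost:5001",
            new GrpcChannelOptions { HttpClient = new HttpClient(handler) });

        // Client stub assumed to be generated from the copied .proto file.
        var client = new UserService.UserServiceClient(channel);

        // Start the server-streaming call.
        using var call = client.GetUserDetails(new UserRequest { CompanyName = "Contoso" });

        // Each MoveNext completes as soon as the server writes the next user.
        while (await call.ResponseStream.MoveNext(CancellationToken.None))
        {
            var user = call.ResponseStream.Current;
            Console.WriteLine(
                $"{user.UserName}: {user.FirstName} {user.LastName}, {user.Address}");
        }
    }
}
```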

Notice how I am using the DangerousAcceptAnyServerCertificateValidator in the code above? That’s just for non-production use, because I am running this without a valid SSL certificate. In production you would use a real certificate.

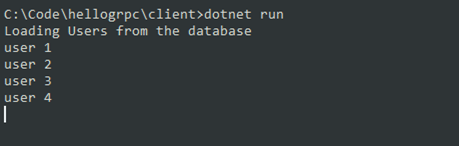

See how I am using the while loop to iterate through the response stream? This allows me to get each user from the stream as the server writes to the stream. And once I get the current item from the stream? Well, I am just showing each user on the console as soon as the server processes the user and writes the user object to the stream.

Now when I run the client, it calls the server, starts listening to the response stream, and handles partial responses as and when the server streams them.

This is cool, because:

- The response is streamed over a channel that is much more optimized than JSON sent over HTTP using REST. There are posts that seem to suggest that gRPC is 7x to 10x faster than JSON over REST.

- The same streaming works in the other direction: I can stream data in the request just as I streamed the response. So, if you want to send a huge amount of data to the server but don't want to wait until all of it has been sent before the server starts processing it, gRPC works for that too. Put simply, it supports two-way streaming.
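In a proto file, the direction of streaming is controlled by where the `stream` keyword appears. The sketch below is purely illustrative; the service, method and message names are hypothetical and are not part of the sample project.

```protobuf
syntax = "proto3";

// Hypothetical service, shown only to illustrate the placement of "stream".
service UserSync {
  // Client streaming: the client sends many records, the server replies once.
  rpc UploadUsers (stream UserRecord) returns (UploadSummary);

  // Two-way streaming: both sides send messages as they become available.
  rpc SyncUsers (stream UserRecord) returns (stream SyncStatus);
}

message UserRecord {
  string userName = 1;
}

message UploadSummary {
  int32 usersReceived = 1;
}

message SyncStatus {
  string userName = 1;
  bool accepted = 2;
}
```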

The post is long, but the actual implementation is tiny and super simple. If you’ve not tried gRPC before, I highly recommend downloading the entire sample project I described in this post from here (it’s listed under the HelloGrpc folder), running the server first and then the client, and mucking around with the code.

Given how good the Visual Studio Code and Visual Studio tooling for gRPC is right now, I personally think it’s really easy to pick up, and most Web API developers will benefit from having this additional arrow in their quiver.

If you are a web developer who writes APIs and cares about performance and payloads, you should care about newer, better ways of communicating between servers and clients than the traditional REST-based Web APIs that send JSON over HTTP.

We moved from XML to JSON because the payloads were smaller in JSON. gRPC is the natural next step for smaller payloads, better compression and two-way streaming of data.

Go on, give it a try. It’s super easy and well worth the few minutes you will invest in learning it. Chances are you can put it to good use right away and see huge gains in performance and end-user experience.
